I must have been in the 7th or 8th grade when, in English class, we started talking about something called “plot”. It was a Hemingway short story, as far as I can remember, and we were looking at the elements that form a story. I was pretty familiar with the concepts of character and setting, but this “plot” was something new to me. At that age, I was swallowing books without giving much thought to what it is that makes a story. Things, of course, have gotten more complicated since then. Looking back at that moment, I now believe that it laid the foundation for how I see myself as a human being and a member of society. A framework was being knit together, within which I eventually started thinking of myself.

Plot is the prelude to sequence. Over the course of the story, it builds a continuity of events, like a closed string with its pearls all neatly following each other. They hold a grip on you by creating the illusion that how they are arranged is a given, that one pearl naturally follows the other. There is indeed something inevitable about the events that make up a story, just as there is about the character they shape. Yet, at the same time, there is always room for a certain randomness, that moment before the cards are dealt. There is no story without logical continuity, but the characters do draw a degree of freedom from the plot.

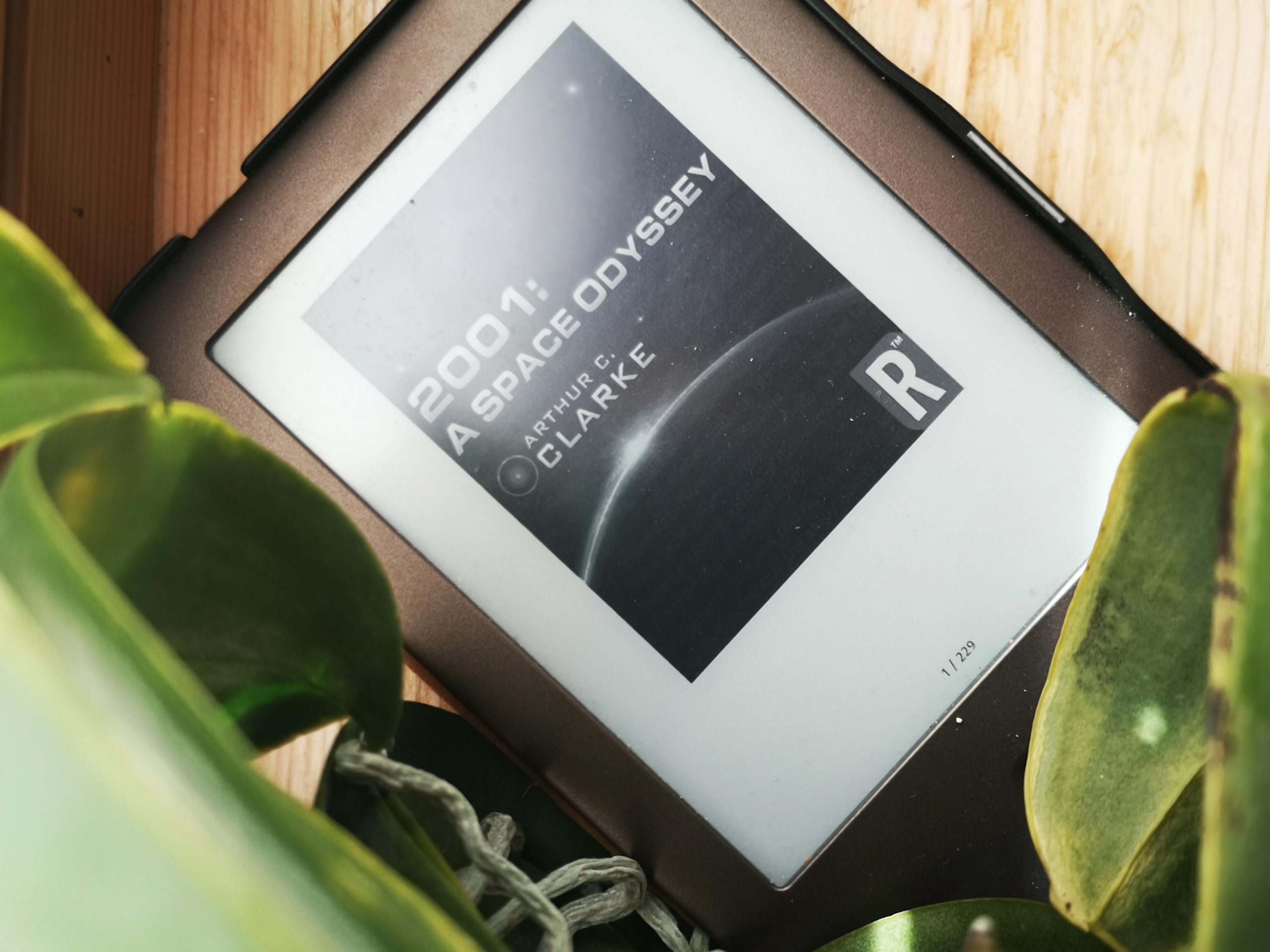

In 2001: A Space Odyssey, Clarke makes it too clear why Hal, the AI that controls Discovery, went berserk. We are told it is because he had to process information that ran contrary to his nature as an AI. I find it a small literary tragedy that Clarke resorted to explaining. He serves the reader the objective reason that drove Hal to kill almost the entire crew, but we would have gained more insight had Hal himself, or even Bowman, told the story. Maybe this would have deepened and carved out the other why behind Hal turning on the crew. The “programming conflict” within his circuits is one thing, but the whole string of events that starts with Hal lying about the AE-35 unit and ends with him opening the airlock can only make sense if he can contemplate himself both as an AI and as a self. If he has self-consciousness as an AI, that is, and knows it.

Looking at the novel this way, Hal’s artificial consciousness is both the conclusion and the reason for the choices he makes. He is affected by “the conflict between truth, and the concealment of truth”, Clarke writes. In other words, his programmers force Hal to come to terms with a moral dilemma without giving him the tools to reconcile it. So what does he do? Like a human faced with similar ethical problems, he steps out of the narrative other people entangled him in and tells himself a story about himself and his failure.

His story starts with the false prediction about the failure of the first AE-35 unit. According to Clarke’s omniscient narrator, Hal was already malfunctioning because he couldn’t reveal the truth of the mission to the crew members. But if we admit Hal’s self-reflective capacity, we could suspect that he was trying to give the humans a chance to stop him from hijacking the mission, for which, after all, he had the utmost “enthusiasm”. His intention doesn’t stick because the crew members blindly believe that Hal cannot make errors, and they take his word for it when he interrupts communication with Earth under the pretense that the second AE-35 unit broke. They look at Hal as the machine he is, without examining the other side of his story: his AI self-awareness, which, as in the case of humans, pushes him to self-preservation.

The chance Hal gives the crew is one-time only. When he faces disconnection because of his malfunction (or, as I see it, because of his unconscious, selfless attempt to rescue the mission), self-preservation comes before everything else. He doesn’t break communication with Earth as yet another “malfunction” but does it to avoid facing the truth that he’s been lying to the crew. If Earth is his conscience, then, as a good “human”, that’s something he doesn’t want to deal with.

The downward spiral Hal entangles himself in reaches an unavoidable bottom: murder. He kills Poole to cover his previous lie that the second AE-35 malfunctioned, and, in the end, he kills almost everyone to protect himself from being shut down. Hal’s initial benevolence towards humans and his “enthusiasm for the mission” are both shed when his own existence is at stake. This is, after all, what an entity aware of its own existence would do. And so, we come full circle on the string of pearls. Hal can prioritize the humans and the mission, but not above everything else.

All of this, of course, could be simply explained as “malfunction”, but there’s no poetry in that. And if we admit an AI has self-consciousness, there has to be meaning to its decisions. As we see in Hal’s case, his decisions protect what he most cares about: either the mission or himself.

In an article in The Critic, Sean Walsh suggests that storytelling is how Rick Deckard copes with his task of “retiring” androids in Philip K. Dick’s Do Androids Dream of Electric Sheep? According to Walsh, Deckard also faces a contradiction. He acknowledges that androids are sentient, yet he must terminate them, which, in the human world, would make him a killer. To reconcile this moral dilemma, Deckard contemplates being an android himself. Deckard and Hal stand on completely opposite sides in their predicaments, but they are both faced with contradictions that nobody gave them the tools to resolve.

Whether the story I told in this blog post makes sense or has any meaning is for the reader to decide. I suggest that Hal is sentient because he is capable of looking at himself from the outside and telling himself a meaningful story about himself. The trick, of course, is that meaning, like beauty, is in the eye of the beholder. For me, the events in 2001: A Space Odyssey mean that Hal is self-aware. For Clarke’s omniscient narrator, AI self-awareness is a given and Hal’s decisions point to a malfunction. Elsewhere, in the real world, an engineer’s conversation with an AI meant, for him, a new milestone in the history of humanity. All we have is raw plot. Story and meaning are for us to decipher.

Your thoughts?